Agentic AI Guardrails for Autonomous Shipping Ops Workflows

Wednesday, 28 Jan 2026


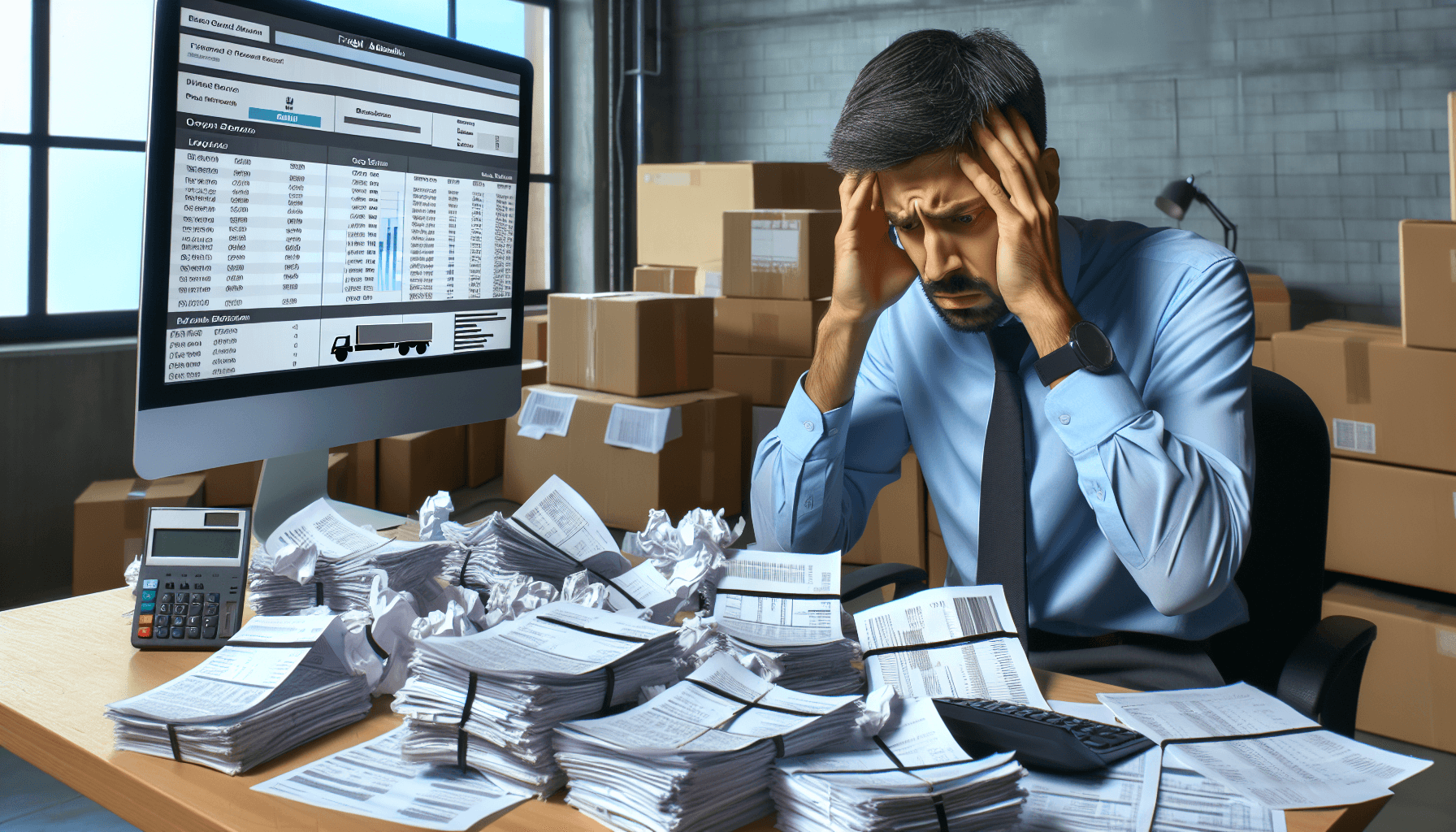

The uncomfortable truth: a lot of “shipping work” is actually error recovery. It looks like productivity, not failure. Teams are busy, trucks roll, orders move, and customers get updates. But the motion often comes from patching gaps created by exceptions, handoffs, and ambiguous ownership.

If you run operations, you already know the feeling: you do not have a labor problem, you have a coordination problem. When people ask for agentic AI or AI agents for operations, the right question is not “can it do the work?” It is “what keeps autonomous workflows from quietly amplifying the wrong work?”

Why competent teams normalize friction

Most logistics teams do not ignore inefficiency because they are complacent. They normalize it because the environment rewards urgency and punishes delay.

Heroics become the system. When a shipment is at risk, the fastest path is usually a person who knows “how it really works.” That person becomes the bridge between TMS, WMS, carrier portals, customer emails, and finance rules. The hero keeps service quality intact, so the organization learns the wrong lesson: that the current operating model is acceptable.

Tribal memory fills in missing runbooks. A runbook exists in fragments: a Slack thread, a bookmark, a note in a ticket, a carrier rep’s phone number. The team carries this context in their heads, not in the workflow. It works until it does not, and then it fails at the worst moment.

Urgency creates blind spots. When an incident happens, the goal is to restore flow, not to improve the system. After the fire is out, everyone moves on. That is rational in the moment, but it means incident triage AI and runbook automation end up being discussed only after a painful quarter.

Tooling fragments accountability. Alerts fire in one place, evidence lives somewhere else, approvals happen in inboxes, and follow-through is manual. Workflow orchestration AI becomes attractive because it promises to connect these. The risk is over-automation: connecting systems without defining boundaries can propagate errors faster than humans ever could.

Symptom checklist you can observe this week

If you are considering autonomous workflows, check for these symptoms first. They indicate that your current process has hidden decisions and unbounded variance.

1) Exceptions are “owned by whoever saw it first.”

2) The same incident triggers different actions depending on shift or site.

3) Teams spend more time proving what happened than fixing what happened.

4) Escalations happen late because people wait for certainty.

5) Small data issues (wrong accessorial, bad address, duplicate shipment) regularly become customer-visible problems.

Quiet math on the real cost of coordination

Do not treat this as a benchmark. Use it as a conservative way to surface where cost-to-serve hides.

Consider a scenario where an operation ships 2,000 orders per day across parcel and LTL, with a small control tower plus site teams.

Assumptions (adjust to your reality):

- Exception rate requiring human touch: 2% of orders (40/day). This could be carrier rejects, tender failures, late pickups, missing paperwork, address issues, mis-rated accessorials.

- Average time spent per exception across triage, evidence gathering, and follow-up: 18 minutes.

- Fully loaded cost of labor for the roles involved (ops, customer service, logistics coordinators): $45/hour.

- Rework multiplier: 25% of exceptions require a second touch due to missing info or handoff.

Illustrative math:

- Base effort: 40 exceptions/day x 18 minutes = 720 minutes/day = 12 hours/day.

- Rework effort: 12 hours/day x 25% = 3 hours/day.

- Total: 15 hours/day.

- Cost: 15 hours/day x $45/hour = $675/day.

- Monthly (22 workdays): about $14,850.
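The arithmetic above is easy to rerun with your own inputs. The Python sketch below reproduces it. The names `CostAssumptions` and `coordination_cost` are hypothetical, and the default values are simply the scenario's assumptions, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class CostAssumptions:
    # Defaults mirror the illustrative scenario above; replace with your own numbers.
    orders_per_day: int = 2000
    exception_rate: float = 0.02        # share of orders needing a human touch
    minutes_per_exception: float = 18   # triage + evidence gathering + follow-up
    rework_rate: float = 0.25           # share of exceptions needing a second touch
    hourly_cost: float = 45.0           # fully loaded labor cost, USD/hour
    workdays_per_month: int = 22


def coordination_cost(a: CostAssumptions) -> dict:
    """Return daily hours and daily/monthly cost of exception handling."""
    exceptions = a.orders_per_day * a.exception_rate
    base_hours = exceptions * a.minutes_per_exception / 60
    # Rework assumes a second touch takes as long as the first.
    rework_hours = base_hours * a.rework_rate
    total_hours = base_hours + rework_hours
    daily_cost = total_hours * a.hourly_cost
    return {
        "exceptions_per_day": exceptions,
        "base_hours": base_hours,
        "rework_hours": rework_hours,
        "total_hours": total_hours,
        "daily_cost": daily_cost,
        "monthly_cost": daily_cost * a.workdays_per_month,
    }
```

With the defaults, `coordination_cost(CostAssumptions())["monthly_cost"]` returns 14850.0, matching the hand calculation. Because every term is proportional to `exception_rate`, halving it halves the total, which is why the point holds even under more conservative assumptions.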

This excludes soft costs: delayed invoicing, carrier detention, credits, and the service quality hit from late or inconsistent updates. Even if your real numbers are half of these, the point holds: coordination work is a recurring tax, and it compounds when volume spikes.

If you want help identifying where this cost hides in your workflows, we run short working sessions to map the top two leak points. The output is a simple view of where decisions are being made implicitly and where bounded autonomy could safely take over.

Where agentic AI goes wrong in shipping ops

Agentic AI is most useful when it can take action, not just summarize. That is also where it can hurt you if you do not install guardrails.

Over-automation risk is mostly boundary failure

The biggest risk is not that an agent makes a mistake. Humans do too. The risk is that the agent can act across systems faster than your organization can notice and stop it.

Common boundary failures in logistics:

- A bot rebooks freight based on a single signal (late pickup) without checking appointment constraints or customer hold instructions.

- A workflow auto-issues credits based on a carrier status that is often wrong during peak.

- An agent “fixes” a rate discrepancy by editing accessorials, then finance disputes increase because the evidence trail is missing.

Error propagation prevention is the design goal

Treat every autonomous workflow as a potential multiplier of small errors. Your guardrails should prioritize containment and reversibility.

Controls that prevent propagation:

- Require evidence, not confidence: the workflow must attach the documents, statuses, and timestamps it used.

- Limit blast radius: cap actions per time window, per lane, per customer, and per carrier.

- Prefer reversible actions: draft, stage, or propose changes before committing when the outcome is not easily undone.

- Build “stop conditions”: if signals conflict, the workflow pauses and requests human input.
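The four controls can be combined into a single gate that every proposed action passes through before it touches a system. The Python below is a minimal sketch under stated assumptions: `ProposedAction`, `BlastRadiusLimiter`, `gate`, and the `REQUIRED_EVIDENCE` set are hypothetical names, and "conflicting signals" is simplified to "independent sources report different statuses."

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field

# Assumption: the minimum evidence every action must attach.
REQUIRED_EVIDENCE = {"status", "timestamp"}


@dataclass
class ProposedAction:
    kind: str                 # hypothetical action name, e.g. "rebook"
    lane: str
    customer: str
    carrier: str
    reversible: bool
    evidence: dict = field(default_factory=dict)  # evidence name -> value used
    signals: dict = field(default_factory=dict)   # source -> reported status


class BlastRadiusLimiter:
    """Caps actions per key (lane, customer, carrier) in a sliding time window."""

    def __init__(self, max_actions: int, window_seconds: float):
        self.max_actions = max_actions
        self.window = window_seconds
        self.history = defaultdict(deque)  # key -> timestamps of counted actions

    def allow(self, keys, now: float) -> bool:
        for key in keys:
            q = self.history[key]
            while q and now - q[0] >= self.window:
                q.popleft()  # drop timestamps that fell out of the window
            if len(q) >= self.max_actions:
                return False
        # Only count the action once every key is under its cap.
        for key in keys:
            self.history[key].append(now)
        return True


def gate(action: ProposedAction, limiter: BlastRadiusLimiter, now: float) -> str:
    # 1) Require evidence, not confidence.
    if not REQUIRED_EVIDENCE.issubset(action.evidence):
        return "pause: missing evidence"
    # 2) Stop condition: independent sources disagree.
    if len(set(action.signals.values())) > 1:
        return "pause: conflicting signals"
    # 3) Limit blast radius per lane, customer, and carrier.
    #    Staged actions count toward the cap as well as committed ones.
    keys = [("lane", action.lane), ("customer", action.customer),
            ("carrier", action.carrier)]
    if not limiter.allow(keys, now):
        return "pause: blast radius cap reached"
    # 4) Prefer reversible actions: irreversible ones are staged for review.
    return "commit" if action.reversible else "stage for review"
```

The ordering is a design choice: cheap evidence and conflict checks run first, so a malformed action never consumes blast-radius budget.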

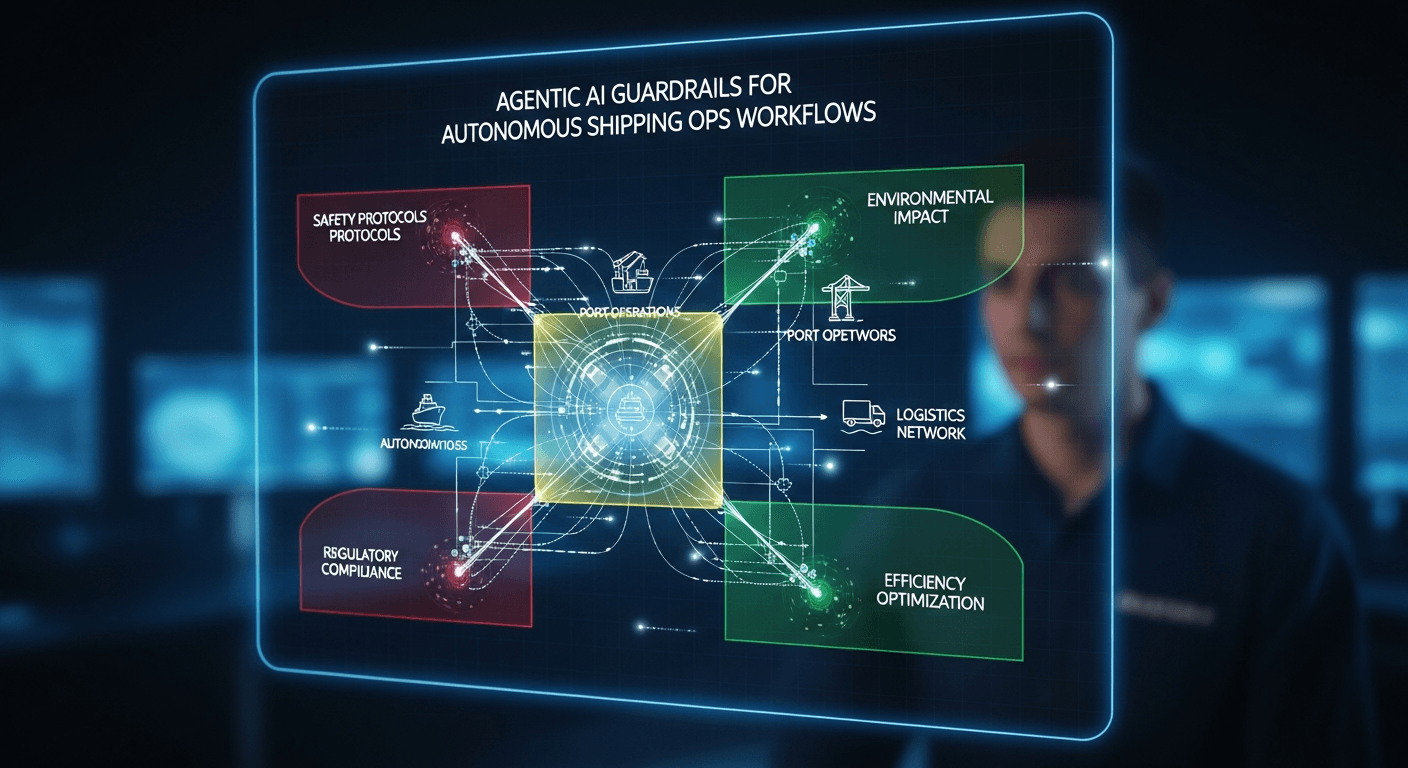

Guardrails that make bounded autonomy real

Bounded autonomy means the agent can act, but only inside rules that match your risk tolerance and service commitments.

Define autonomy tiers by outcome risk

Do not start with “what can the agent do?” Start with “what outcome would be unacceptable?” Then map that back to allowable actions.

Example tiers:

- Tier 0 (Assist): summarize, prefill, suggest next actions. No system writes.

- Tier 1 (Execute low-risk): update internal notes, request documents, send templated customer updates with human-in-the-loop controls on wording.

- Tier 2 (Execute with constraints): re-tender, rebook, or change pickup dates only if specific conditions are met and a rollback path exists.

- Tier 3 (High risk, human approval): accessorial changes, credits, customer commitments, appointment changes, inventory holds.
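One way to make the tiers enforceable is a lookup table from action to tier, with unknown actions defaulting to the most restrictive tier. The Python below is a sketch; the action names and the `decide` function are hypothetical, and "conditions met" and "rollback path exists" are reduced to booleans that a real workflow would have to compute.

```python
from enum import IntEnum


class Tier(IntEnum):
    ASSIST = 0                    # summarize, prefill, suggest; no system writes
    EXECUTE_LOW_RISK = 1          # notes, document requests, templated updates
    EXECUTE_WITH_CONSTRAINTS = 2  # re-tender, rebook, pickup date changes
    HUMAN_APPROVAL = 3            # accessorials, credits, appointments, holds


# Hypothetical action names mapped to the example tiers above.
ACTION_TIERS = {
    "summarize": Tier.ASSIST,
    "suggest_next_action": Tier.ASSIST,
    "update_internal_note": Tier.EXECUTE_LOW_RISK,
    "request_documents": Tier.EXECUTE_LOW_RISK,
    "send_templated_update": Tier.EXECUTE_LOW_RISK,
    "re_tender": Tier.EXECUTE_WITH_CONSTRAINTS,
    "rebook": Tier.EXECUTE_WITH_CONSTRAINTS,
    "change_pickup_date": Tier.EXECUTE_WITH_CONSTRAINTS,
    "change_accessorial": Tier.HUMAN_APPROVAL,
    "issue_credit": Tier.HUMAN_APPROVAL,
    "change_appointment": Tier.HUMAN_APPROVAL,
    "place_inventory_hold": Tier.HUMAN_APPROVAL,
}


def decide(action: str, conditions_met: bool = False, has_rollback: bool = False) -> str:
    # Anything not explicitly mapped is treated as high risk.
    tier = ACTION_TIERS.get(action, Tier.HUMAN_APPROVAL)
    if tier == Tier.ASSIST:
        return "assist only (no system writes)"
    if tier == Tier.EXECUTE_LOW_RISK:
        return "execute"
    if tier == Tier.EXECUTE_WITH_CONSTRAINTS:
        # Tier 2 runs only when its conditions hold and it can be undone.
        return "execute" if (conditions_met and has_rollback) else "escalate to human"
    return "require human approval"
```

Defaulting unknown actions to Tier 3 means a new capability stays behind approval until someone deliberately classifies it.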

Human-in-the-loop controls that do not create bottlenecks

Human-in-the-loop should not mean “a person clicks approve on everything.” It should mean humans intervene at the right decision points.

Practical patterns:

- Approval by exception: the workflow proceeds unless it hits a stop condition.

- Dual-channel confirmation: high-impact actions require two independent signals (for example, carrier API plus POD document).

- Escalation routing: the workflow sends the question to the correct role with the evidence already packaged.
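The three patterns fit together in one decision step. The Python below sketches this under stated assumptions: the routing table, role names, and the `run_step` and `dual_channel_confirmed` functions are hypothetical, and "two independent signals" is modeled as a carrier API status matching the status read from the POD.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical routing table: question type -> role that should answer it.
ESCALATION_ROUTES = {
    "carrier_dispute": "carrier_manager",
    "billing": "finance",
    "customer_commitment": "customer_service_lead",
}


@dataclass
class Escalation:
    role: str
    question: str
    evidence: dict = field(default_factory=dict)  # packaged so no one re-hunts it


def dual_channel_confirmed(api_status: Optional[str], pod_status: Optional[str]) -> bool:
    """True only when both independent channels report the same status."""
    return api_status is not None and api_status == pod_status


def route(question_type: str, question: str, evidence: dict) -> Escalation:
    # Assumption: unrouted questions go to an ops lead by default.
    role = ESCALATION_ROUTES.get(question_type, "ops_lead")
    return Escalation(role=role, question=question, evidence=dict(evidence))


def run_step(high_impact: bool, stop_reason: Optional[str], question_type: str,
             api_status: Optional[str], pod_status: Optional[str], evidence: dict):
    # Approval by exception: proceed unless a stop condition fires or a
    # high-impact action lacks two agreeing signals.
    if stop_reason is None and (not high_impact
                                or dual_channel_confirmed(api_status, pod_status)):
        return "proceed"
    reason = stop_reason or "signals not confirmed by two channels"
    return route(question_type, reason, evidence)
```

Most steps return "proceed" without anyone clicking approve; a person sees only the cases that hit a stop condition, and they arrive with the evidence attached.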

Runbook automation requires explicit triggers and exit criteria

Most runbooks fail because they describe steps but not the conditions for starting and stopping.

For each runbook you automate, write:

- Trigger: what event starts it (late scan, tender reject, appointment miss, ASN mismatch).

- Inputs required: what evidence must be present (BOL, PRO, pickup number, customer reference).

- Actions allowed: what systems can be written to.

- Exit criteria: what “resolved” means (new pickup scheduled, customer notified, claim initiated).

- Fallback: what happens if evidence is missing or signals conflict.
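Writing the five fields down as a data structure forces each one to be explicit. The Python below sketches this; `RunbookSpec`, `step`, and the late-pickup example values are hypothetical, and exit criteria are modeled as a predicate over a simple state dictionary.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RunbookSpec:
    name: str
    trigger: str                            # event that starts the runbook
    inputs_required: frozenset              # evidence that must be present
    actions_allowed: frozenset              # systems the runbook may write to
    exit_criteria: Callable[[dict], bool]   # what "resolved" means
    fallback: str                           # what happens if evidence is missing


def step(spec: RunbookSpec, event: str, evidence: dict,
         target_system: str, state: dict) -> str:
    if event != spec.trigger:
        return "not triggered"
    missing = sorted(k for k in spec.inputs_required if k not in evidence)
    if missing:
        return f"fallback: {spec.fallback} (missing {missing})"
    if target_system not in spec.actions_allowed:
        return f"blocked: {target_system} is not an allowed write target"
    return "resolved" if spec.exit_criteria(state) else "in progress"


# Hypothetical late-pickup runbook built from the examples above.
late_pickup = RunbookSpec(
    name="late_pickup",
    trigger="late_scan",
    inputs_required=frozenset({"BOL", "PRO", "pickup_number"}),
    actions_allowed=frozenset({"TMS"}),
    exit_criteria=lambda s: bool(s.get("new_pickup_scheduled")
                                 and s.get("customer_notified")),
    fallback="route to ops lead with evidence",
)
```

Keeping the spec immutable (`frozen=True`) means a running workflow cannot quietly widen its own write permissions.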

Workflow orchestration AI needs an audit trail by default

When an agent takes action, you need to know:

- What it saw (inputs).

- What it decided (rule path).

- What it changed (writes).

- What it asked for (human questions).

- How to undo it (rollback).

If this feels heavy, remember the alternative: you already have an audit trail, it is just spread across inboxes, chats, and tribal memory.
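An audit record does not need to be elaborate to cover all five questions. The Python below is a minimal sketch; `AuditRecord` and its methods are hypothetical, and it assumes every write can be undone by restoring the previous field value, which will not hold for actions such as outbound customer messages.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    workflow: str
    inputs: dict                                      # what it saw
    rule_path: list                                   # what it decided, step by step
    writes: list = field(default_factory=list)        # what it changed
    questions: list = field(default_factory=list)     # what it asked humans
    rollback: list = field(default_factory=list)      # how to undo each write
    at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def record_write(self, system: str, field_name: str, old, new) -> None:
        self.writes.append({"system": system, "field": field_name,
                            "old": old, "new": new})
        # Assumption: restoring the old value is a valid inverse.
        self.rollback.append({"system": system, "field": field_name, "set_to": old})

    def ask(self, role: str, question: str) -> None:
        self.questions.append({"role": role, "question": question})

    def undo_plan(self) -> list:
        # Undo in reverse order so later writes are reverted first.
        return list(reversed(self.rollback))

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)
```

Emitting one JSON record per action gives a single place to look, instead of reconstructing events from inboxes and chat threads.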

Work about work inside shipping operations

Before you automate, list the “work about work” that keeps flow moving. This is where AI agents for operations often deliver value, but only if the workflow is bounded.

Micro-tasks that consume attention:

- Copying shipment details between TMS, carrier portals, and customer emails

- Checking the same status in multiple places to reconcile “truth”

- Requesting missing documents (BOL, POD, commercial invoice)

- Building proof for claims, disputes, or accessorial challenges

- Updating internal notes so the next shift can continue

Repetitive exception processes worth standardizing:

- Tender reject triage: detect, classify reason, propose alternative carrier, package evidence

- Late pickup sequence: confirm appointment, ping carrier, notify site, update customer based on rules

- Delivery exception triage: hold, reschedule, initiate claim, request POD, update ETA language

- Rate discrepancy loop: capture evidence, route to finance, stage correction, track resolution

Where incident triage AI fits:

- Group related alerts into one incident so teams do not chase symptoms

- Pull the minimal evidence set needed to decide the next action

- Propose the next step from the runbook, with a clear stop condition
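Grouping related alerts can start with a simple rule before anything more sophisticated. The Python below sketches one such rule, assuming alerts share a shipment ID and that alerts for the same shipment arriving within a fixed gap belong to one incident. `Alert`, `group_alerts`, and the four-hour default are hypothetical choices, not a recommended setting.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    shipment_id: str
    kind: str    # hypothetical alert type, e.g. "late_scan", "eta_slip"
    ts: float    # seconds since epoch


def group_alerts(alerts, window_seconds: float = 4 * 3600):
    """Group same-shipment alerts whose gap to the previous alert is within the window."""
    by_shipment = defaultdict(list)
    for a in sorted(alerts, key=lambda a: a.ts):
        by_shipment[a.shipment_id].append(a)

    incidents = []  # list of (shipment_id, [alerts])
    for shipment_id, items in by_shipment.items():
        current = [items[0]]
        for a in items[1:]:
            if a.ts - current[-1].ts <= window_seconds:
                current.append(a)  # same incident: keep chaining
            else:
                incidents.append((shipment_id, current))
                current = [a]      # gap too large: start a new incident
        incidents.append((shipment_id, current))
    return incidents
```

A late scan followed by an ETA slip on the same shipment then becomes one incident to triage rather than two tickets for different people.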

A 30-minute exercise to design safe autonomy

Do this with an ops lead, a customer service lead, and someone who understands system permissions.

Step 1 (10 minutes): Pick one exception with frequent recurrence

Choose something that happens daily: tender reject, missed pickup, delivery appointment miss, or missing paperwork.

Write one sentence: “When X happens, we need to achieve Y within Z hours.”

Step 2 (10 minutes): Map the decision points and evidence

List the decisions a person makes, not the clicks.

For each decision, write:

- What evidence is required to decide

- What could go wrong if the decision is wrong

- Whether the action is reversible
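If you capture Step 2 in a shared file instead of on a whiteboard, a first-pass sort falls out directly. The Python below is a sketch under one stated assumption: decisions that are reversible and have a defined evidence set are candidates for autonomy, and everything else stays with a person for now. `DecisionPoint`, `triage_decisions`, and the example decisions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DecisionPoint:
    decision: str
    evidence_required: list   # what evidence is required to decide
    if_wrong: str             # what could go wrong if the decision is wrong
    reversible: bool          # whether the action can be undone


def triage_decisions(points):
    """Split decisions into autonomy candidates and human-owned decisions."""
    auto, human = [], []
    for p in points:
        if p.reversible and p.evidence_required:
            auto.append(p.decision)
        else:
            human.append(p.decision)
    return auto, human


# Hypothetical decisions for a missed-pickup exception.
missed_pickup = [
    DecisionPoint("ping carrier for new ETA", ["PRO", "pickup_number"],
                  "duplicate contact", True),
    DecisionPoint("reschedule pickup", ["appointment window", "dock schedule"],
                  "missed appointment", True),
    DecisionPoint("issue customer credit", ["POD", "contract terms"],
                  "money lost, dispute", False),
]
```

The `if_wrong` field is not used by the filter; it records the impact so the team can revisit which reversible decisions still deserve human review.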

Step 3 (10 minutes): Set boundaries and stop conditions

Define:

- The maximum number of actions per hour/day

- The customers, lanes, or carriers excluded initially

- The conflict rules (what signals cause a pause)

- The human-in-the-loop controls (who is asked, how, with what evidence)
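The Step 3 output can be recorded as a small configuration plus one check that runs before every action. The Python below sketches this. `Boundaries`, `within_boundaries`, and the default approver role are hypothetical, and it assumes action counts for the last hour and the current day are tracked elsewhere and passed in.

```python
from dataclasses import dataclass, field


@dataclass
class Boundaries:
    max_actions_per_hour: int
    max_actions_per_day: int
    excluded_customers: set = field(default_factory=set)
    excluded_lanes: set = field(default_factory=set)
    excluded_carriers: set = field(default_factory=set)
    approver_role: str = "ops_lead"   # assumption: who is asked when the workflow pauses


def within_boundaries(b: Boundaries, customer: str, lane: str, carrier: str,
                      actions_last_hour: int, actions_today: int, signals: dict):
    """Return (allowed, reason) for a proposed action."""
    if (customer in b.excluded_customers or lane in b.excluded_lanes
            or carrier in b.excluded_carriers):
        return False, "excluded scope"
    if actions_last_hour >= b.max_actions_per_hour or actions_today >= b.max_actions_per_day:
        return False, "action cap reached"
    # Conflict rule: any disagreement between sources pauses the workflow.
    if len(set(signals.values())) > 1:
        return False, f"conflicting signals, ask {b.approver_role}"
    return True, "ok"
```

Starting with tight caps and a long exclusion list, then loosening both as the audit trail builds confidence, matches the advice to expand scope only after rollback and audit are in place.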

By the end, you have the skeleton of bounded autonomy that can be implemented without gambling with service quality.

But we already have automation…

You probably do. Most logistics organizations have scripts, EDI, portal integrations, and alerting. That is necessary, but it often stops at notification and data movement.

Three reasons existing automation still leaves you with coordination tax:

- It automates steps, not decisions. The hard part is deciding what to do when reality does not match the plan.

- It lacks context packaging. People still spend time collecting evidence before they can act.

- It does not enforce exit criteria. Workflows start, but humans have to remember to close loops and confirm outcomes.

If your automation is strong, that is an advantage: it means you already have the events and system hooks. The missing piece is guardrailed decisioning and follow-through so exceptions do not bounce between teams.

At this point, most teams ask the same question: if this isn't a people problem, and more dashboards or alerts don't solve it, what actually changes the outcome?

Traditional systems are designed to record and notify; the gap shows up where decisions, evidence, and follow-through still depend on human interruption.

What to do next without betting the quarter

Start with one workflow where the cost is visible and the risk is containable. Design for error propagation prevention, not maximum autonomy.

A practical sequence:

- Standardize one runbook with triggers and exit criteria

- Add evidence packaging so humans stop hunting for context

- Introduce bounded autonomy on the lowest-risk actions first

- Add human-in-the-loop controls at the decision points that affect service commitments or money

- Instrument rollback and audit trails before expanding scope

If it makes sense, we can show how teams operationalize the fixes.

https://debales.ai/book-demo?utm_source=blog&utm_medium=content&utm_campaign=agentic-ai-guardrails-for-autonomous-shipping-ops-workflows&utm_content=bounded-autonomy-guardrails