AI Governance Framework for Shipping: Privacy, Audit Trails, Risk

Wednesday, 28 Jan 2026


It is uncomfortable to admit, but many shipping operations are already running AI without governance. Not because teams are careless, but because the pressure to move freight turns every shortcut into “temporary,” and temporary becomes permanent. It looks like work, not failure: someone is checking outputs, reconciling exceptions, and cleaning up messes after the fact.

In shipping, the risk is not abstract. It is a misrouted container, a denied claim with missing evidence, a customer escalation because an ETA narrative was confidently wrong, or a compliance review where you cannot reproduce why a decision was made. AI can improve throughput, but without responsible AI logistics practices, it can also create a new class of invisible operational debt.

Why competent teams normalize AI governance gaps

There is a reason smart teams tolerate messy controls around data privacy and AI risk management.

First, heroics work until they do not. A planner notices the model is “off” and manually overrides. A supervisor catches a suspicious recommendation and asks for a second check. The team saves the day, so the system feels “good enough,” even though the process is brittle.

Second, tribal memory substitutes for audit trails. The person who built the prompt chain, tuned the thresholds, or negotiated the vendor contract remembers the caveats. That knowledge lives in chat threads, not in operational documentation. When they are out, the organization loses the why.

Third, urgency creates a bias toward shipping features over controls. It is easy to justify a new automation that reduces touches. It is harder to justify model drift monitoring, prompt injection defense, or structured AI audit trails, because those pay off by preventing future failures, not by creating immediate visible wins.

Finally, compliance in shipping is often event-driven. Controls get tightened after a customer dispute, a regulator inquiry, or a security incident. Until then, everyone is busy and the system keeps running.

Symptom checklist you can observe this week

If AI is already touching customer-facing or compliance-relevant workflows, look for these symptoms:

1) “We cannot explain why” moments: outputs cannot be reproduced, and the decision path is not captured.

2) Manual shadow QA: people routinely verify model results in spreadsheets or email, outside the system of record.

3) Version confusion: no one can quickly tell which model, prompt, or policy was active when an incident occurred.

4) Privacy uncertainty: teams are unsure what data is allowed in prompts, what is stored, and who can access it.

5) Vendor opacity: you rely on a provider but cannot clearly answer where data is processed, retained, and audited.

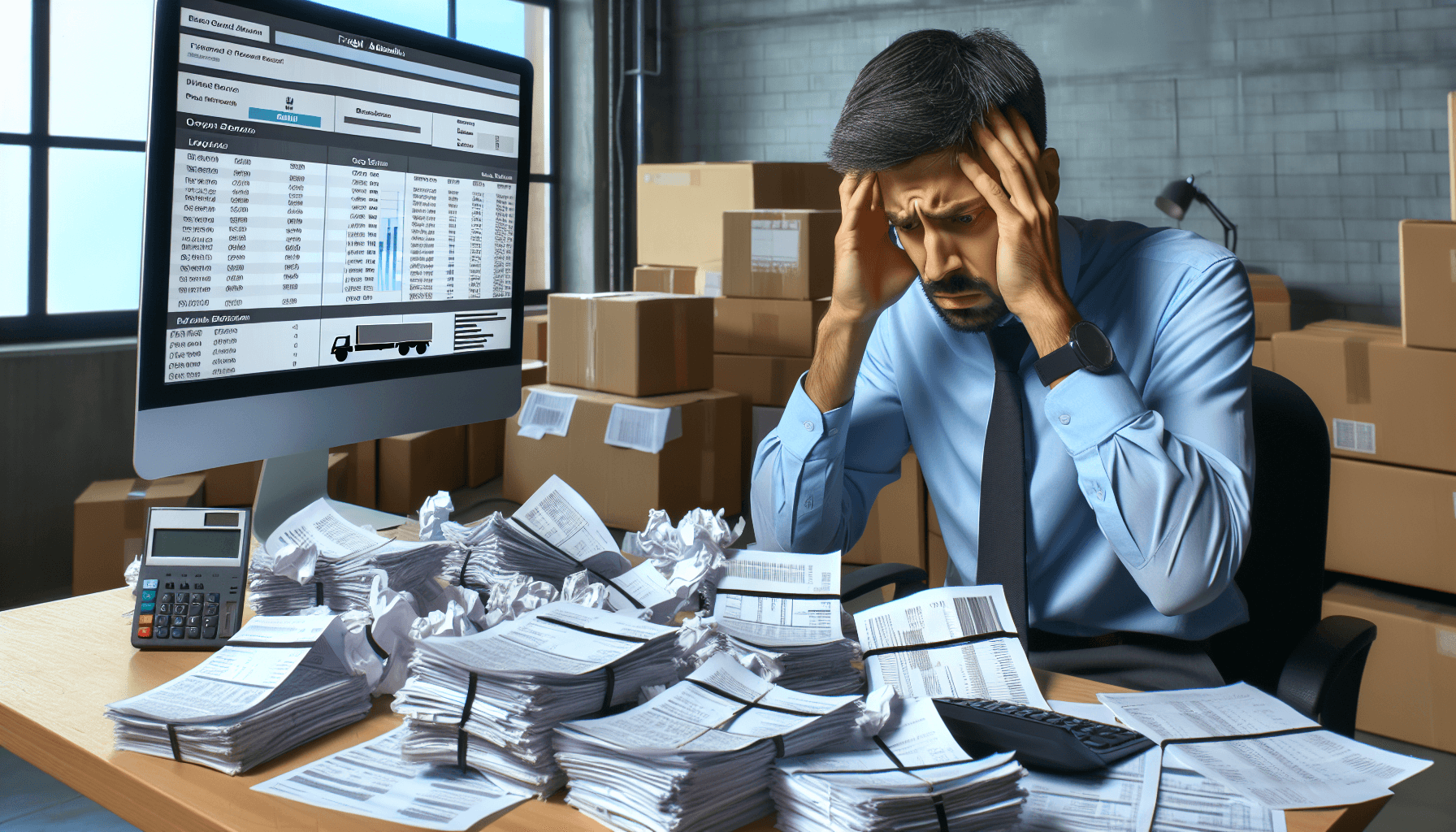

The quiet math: what weak governance costs in operations

Avoiding exaggerated ROI claims is part of responsible practice, so use adjustable assumptions and run your own numbers. Consider a scenario where a mid-sized shipping operation uses AI for ETA narratives, exception triage, document extraction, and customer responses.

Assumption A: Exception volume

Imagine 1,200 shipment exceptions per week that get touched by AI-assisted workflows.

Assumption B: Error and rework rate

Assume a conservative 3% of AI-assisted exceptions lead to rework because of GenAI hallucinations, drifted classifications, or missing evidence. That is 36 cases per week.

Assumption C: Time cost per rework

Assume each rework case consumes 25 minutes across operations, customer service, and a supervisor review. That is 900 minutes, or 15 labor hours per week.

Assumption D: Downstream service cost

Now add the hidden cost: if 10 of those 36 cases generate customer escalations, and each escalation takes 40 minutes end-to-end, that is another 6.7 hours.

Assumption E: Compliance and dispute exposure

Finally, assume one case per month becomes a dispute where you need to reconstruct the decision history. If you lack AI audit trails, you might spend 6 hours across teams pulling emails, logs, and screenshots. That is 6 hours per month, plus the risk that you still cannot prove what happened.

Even with cautious numbers, you are looking at roughly 22 hours per week of avoidable work (15 + 6.7), plus periodic bursts for disputes. Convert to your internal loaded labor cost and you will see why governance is operational, not theoretical. The bigger cost is variability: the week you lose three senior people to an audit scramble is the week your throughput drops.
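To run your own numbers, the assumptions above can be put into a small calculator. This is a minimal sketch: the class and field names are illustrative, and the defaults are simply Assumptions A through E from this section, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class GovernanceCostAssumptions:
    """Adjustable inputs; defaults mirror Assumptions A-E in the text."""
    exceptions_per_week: int = 1200      # Assumption A
    rework_rate: float = 0.03            # Assumption B
    minutes_per_rework: float = 25       # Assumption C
    escalations_per_week: int = 10       # Assumption D
    minutes_per_escalation: float = 40   # Assumption D
    disputes_per_month: float = 1        # Assumption E
    hours_per_dispute: float = 6         # Assumption E


def weekly_hours(a: GovernanceCostAssumptions) -> dict:
    """Convert the assumptions into labor hours."""
    rework_cases = a.exceptions_per_week * a.rework_rate
    rework_hours = rework_cases * a.minutes_per_rework / 60
    escalation_hours = a.escalations_per_week * a.minutes_per_escalation / 60
    return {
        "rework_cases": rework_cases,
        "rework_hours": rework_hours,
        "escalation_hours": escalation_hours,
        "weekly_total_hours": rework_hours + escalation_hours,
        # Dispute effort is monthly, so it is reported separately.
        "monthly_dispute_hours": a.disputes_per_month * a.hours_per_dispute,
    }
```

With the defaults, `weekly_hours(GovernanceCostAssumptions())` gives 15 rework hours and about 6.7 escalation hours per week. Change one field at a time, for example `GovernanceCostAssumptions(rework_rate=0.05)`, to see which assumption moves your total the most.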

If you want help identifying where this cost hides in your workflows, we run short working sessions to map the top two leak points and define what evidence and controls would remove the rework.

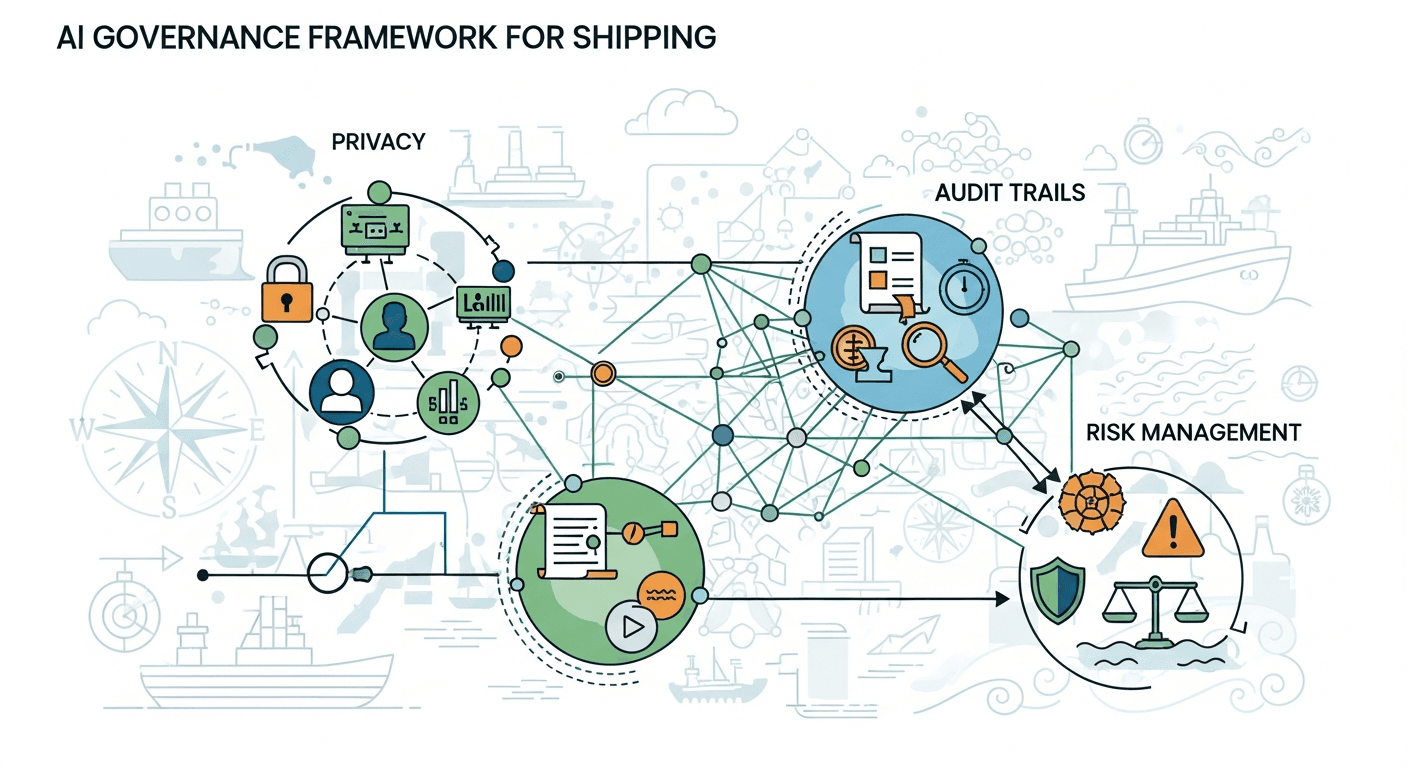

Build an AI governance framework that fits shipping reality

A workable AI governance framework for shipping should be anchored in the decisions that move freight, not in abstract policy documents. Use four pillars: data, decisions, evidence, and vendors.

Data privacy for AI: decide what can enter the model

Start with a clear “allowed data” policy that matches your operating model.

- Define data classes: public, internal, confidential, regulated

- Specify what can be included in prompts and what must be masked or summarized

- Decide retention rules: what is stored, for how long, and where

- Set access boundaries: who can view prompts, outputs, and logs

- Document the “no-go” list: identifiers, contract pricing, claims details, personally identifiable information where not permitted

Operationally, the goal is to remove ambiguity so teams do not improvise under pressure. If people are guessing, you will get inconsistent risk.
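An "allowed data" policy is easier to follow when it is enforced in code, not remembered. The sketch below assumes a hypothetical field-to-class mapping (`FIELD_CLASSES`), a hypothetical ceiling (`MAX_PROMPT_CLASS`), and a simple email pattern. Real field names, classes, and masking rules would come from your own policy.

```python
import re
from enum import Enum


class DataClass(Enum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    REGULATED = 4


# Hypothetical tagging of record fields; replace with your own schema.
FIELD_CLASSES = {
    "port_of_discharge": DataClass.PUBLIC,
    "exception_type": DataClass.INTERNAL,
    "contract_rate": DataClass.CONFIDENTIAL,
    "consignee_email": DataClass.REGULATED,
}

# Assumption: nothing above INTERNAL may enter a prompt.
MAX_PROMPT_CLASS = DataClass.INTERNAL

# Deliberately simple email pattern, for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def build_prompt_fields(record: dict) -> dict:
    """Keep only fields allowed in prompts and mask emails in free text."""
    allowed = {}
    for name, value in record.items():
        # Untagged fields are treated as REGULATED (deny by default).
        data_class = FIELD_CLASSES.get(name, DataClass.REGULATED)
        if data_class.value > MAX_PROMPT_CLASS.value:
            continue
        if isinstance(value, str):
            value = EMAIL_RE.sub("[EMAIL]", value)
        allowed[name] = value
    return allowed
```

The deny-by-default choice matters more than the specific classes: a new field that nobody has tagged stays out of prompts until someone decides where it belongs.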

AI audit trails: capture evidence without slowing teams down

Shipping teams need to answer two questions quickly: what did the system recommend, and what did the human do next.

Design audit trails around:

- Input snapshot: key fields used (sanitized where needed)

- Model and prompt version: model name, prompt template ID, and configuration

- Output record: the recommendation or generated text

- Decision capture: accept, modify, reject, with a reason code

- Follow-through: what action was taken in TMS, CRM, or customer channel

Keep it lightweight. Reason codes can be short and operational, like “missing doc,” “customer constraint,” “risk flag,” “override for port congestion.” The point is to preserve decision context.
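The five elements above map naturally onto one structured record per AI-assisted decision. This is a minimal sketch with hypothetical field names. It assumes records are written as JSON lines, and it treats a reason code as mandatory for every decision, which you may want to relax for plain accepts.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

DECISIONS = {"accept", "modify", "reject"}


@dataclass
class AuditRecord:
    shipment_id: str
    input_snapshot: dict       # key fields used, already sanitized
    model_name: str
    prompt_template_id: str
    config: dict               # e.g. thresholds or generation settings
    output: str                # recommendation or generated text
    decision: str              # accept, modify, or reject
    reason_code: str           # short operational code, e.g. "missing doc"
    follow_through: str        # action taken in TMS, CRM, or customer channel
    decided_by: str
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        if self.decision not in DECISIONS:
            raise ValueError(f"unknown decision: {self.decision!r}")
        if not self.reason_code.strip():
            raise ValueError("a reason code is required")


def to_log_line(record: AuditRecord) -> str:
    """Serialize one record as a single JSON line for append-only storage."""
    return json.dumps(asdict(record), sort_keys=True)
```

Rejecting a record without a valid decision or reason code at write time is what keeps the trail usable later. A free-text field that people can skip tends to be empty exactly when a dispute needs it.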

AI risk management for GenAI and LLM security

If you are using LLMs for customer messages, internal summaries, or triage, focus on three practical risk categories.

1) GenAI hallucination risk

- Restrict tasks to bounded outputs (templates, structured fields, controlled vocabularies)

- Require citations to internal records for any factual claim that drives action

- Define a “do not guess” policy: when data is missing, the model must ask for it

2) Prompt injection defense

- Treat inbound text (emails, documents, web forms) as untrusted

- Strip or quarantine instructions embedded in customer content

- Use system-level rules that cannot be overridden by user text

- Add content filters for sensitive requests (rates, access, credential-like content)

3) Model drift monitoring

- Track key metrics tied to operations: misclassification rate, override frequency, escalation rate, and time-to-resolution

- Set thresholds for investigation, not just alerts

- Review drift on a cadence tied to change drivers: seasonal surges, new carriers, new lanes, policy updates
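For drift monitoring, override frequency is often the cheapest signal to start with, because it falls out of decision capture. The sketch below assumes decisions are available as `(segment, decision)` pairs, where a segment might be a lane, customer, or exception type. The 10-point increase threshold is an arbitrary placeholder, not a recommendation.

```python
from collections import Counter


def override_rates(events):
    """Share of non-accepted recommendations per segment.

    events: iterable of (segment, decision) pairs, where decision is
    "accept", "modify", or "reject".
    """
    totals = Counter()
    overrides = Counter()
    for segment, decision in events:
        totals[segment] += 1
        if decision != "accept":
            overrides[segment] += 1
    return {segment: overrides[segment] / totals[segment] for segment in totals}


def segments_to_investigate(current, baseline, max_increase=0.10):
    """Segments whose override rate rose more than max_increase over baseline.

    Segments missing from the baseline are compared against 0.0, so new
    lanes or customers surface as soon as they see meaningful overrides.
    """
    return sorted(
        segment
        for segment, rate in current.items()
        if rate - baseline.get(segment, 0.0) > max_increase
    )
```

Comparing against a per-segment baseline, not a single global rate, is the design choice that ties this to the change drivers listed above: a new carrier or lane can drift badly while the overall average barely moves.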

Vendor risk management that matches your compliance obligations

Many AI programs fail governance because procurement checks the contract, but operations cannot validate controls day-to-day.

Make vendor risk management practical:

- Data processing map: where data flows, where it is stored, and who can access it

- Retention and deletion: clear commitments and verification steps

- Incident response: timelines, contacts, and evidence expectations

- Change control: how model updates and policy changes are communicated

- Audit support: what logs, reports, and attestations you can retrieve quickly

If you cannot get evidence within 48 hours, assume you will not get it during a disruption.

Work about work: where governance actually gets implemented

Governance becomes real when it is embedded into micro-tasks. Here are the repetitive processes where controls either exist or fail.

Data handling micro-tasks

- Mask identifiers before prompts are created

- Tag data fields as allowed or restricted for model access

- Route restricted inputs to a non-LLM workflow

- Periodically sample prompts for policy adherence

Decision and evidence micro-tasks

- Record accept or override decisions with a reason code

- Attach the output to the shipment record or case record

- Capture who approved customer-facing messages when confidence is low

- Log exceptions where the model said “unknown” and a human resolved it

Risk monitoring micro-tasks

- Review a weekly set of model outputs for failure modes

- Track override frequency by lane, customer, and exception type

- Investigate spikes in escalations tied to AI-assisted workflows

- Maintain a simple changelog for prompts, policies, and model versions

Security and access micro-tasks

- Rotate API keys and review access lists

- Validate that prompts and logs are not copied into unmanaged tools

- Run a quarterly test for prompt injection defense using sanitized sample inputs

- Confirm vendor controls after any major system upgrade
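The quarterly prompt injection test can start as a small, repeatable script. The sketch below is an assumption-heavy illustration: the patterns are a few example phrases, and `screen_inbound` and `run_injection_test` are hypothetical helper names. Pattern matching alone is a weak heuristic, so treat this as a regression check on your screening step, not as the defense itself.

```python
import re

# Illustrative patterns only; a real list would come from your own incidents.
SUSPICIOUS_PATTERNS = {
    "override_attempt": re.compile(
        r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE
    ),
    "system_prompt_probe": re.compile(r"\bsystem prompt\b", re.IGNORECASE),
    "credential_request": re.compile(
        r"\b(api[ _-]?key|password|credentials?)\b", re.IGNORECASE
    ),
}


def screen_inbound(text):
    """Flag inbound text that should be quarantined before reaching a model."""
    matches = sorted(
        name for name, pattern in SUSPICIOUS_PATTERNS.items() if pattern.search(text)
    )
    return {"quarantined": bool(matches), "matches": matches}


def run_injection_test(samples):
    """Return the sample texts where screening disagreed with the expectation.

    samples: list of (text, should_quarantine) pairs built from sanitized
    examples of real inbound emails, documents, or web form entries.
    """
    return [
        text
        for text, expected in samples
        if screen_inbound(text)["quarantined"] != expected
    ]
```

An empty list from `run_injection_test` means the screen behaved as expected on that sample set. Keeping the samples under version control alongside the prompt changelog makes each quarterly run comparable to the last.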

A practical 30-minute exercise to find your top governance gaps

Do this with an operations lead, a customer service lead, and someone who owns compliance or risk. Timebox it.

Step 1 (10 minutes): Pick one AI-touched workflow

Choose one workflow where AI influences a decision that affects cost-to-serve or service quality. Examples: exception triage, detention prevention outreach, document verification, ETA updates.

Answer in plain terms:

- What decision is being made?

- What is the worst plausible failure?

- Who pays for it: ops time, customer trust, compliance exposure?

Step 2 (10 minutes): Trace the evidence path

Write down what you would need to prove, after the fact, that the decision was reasonable.

- What inputs mattered?

- Where do those inputs live today?

- Where is the AI output stored?

- Where is the human action recorded?

If any link is “in someone’s inbox,” mark it as a gap.

Step 3 (10 minutes): Add one control and one metric

Pick one control you can add without re-platforming.

- Control examples: masking rule, required reason code, confidence threshold for human approval, “no guess” response template

Pick one metric that tells you if the control works.

- Metric examples: override rate, escalations, rework time, missing-evidence cases

Commit to reviewing that metric weekly for four weeks.

But we already have automation…

Automation is not the same as governance. Many shipping teams have RPA scripts, EDI rules, exception dashboards, and standardized SOPs. Those help with repeatability, but AI introduces three new issues:

1) The output can be plausible but wrong.

Rules-based automation usually fails obviously. GenAI can fail convincingly, which shifts the burden to humans to detect errors.

2) The system can change without anyone noticing.

A dashboard does not tell you that the underlying model or prompt behavior drifted. You need model drift monitoring tied to operational outcomes, not just IT uptime.

3) Evidence matters more when decisions are probabilistic.

If a customer disputes a charge or a regulator asks why a decision was made, “the system recommended it” is not defensible without AI audit trails, policy controls, and human decision capture.

At this point, most teams ask the same question: if this is not a people problem, and it is not solved by more dashboards or alerts, what actually changes the outcome?

Traditional systems are designed to record and notify. The gap shows up where decisions, evidence, and follow-through still depend on human interruption.

Closing: make governance an operational capability

Responsible AI logistics is less about writing policies and more about making the work traceable, repeatable, and resilient when the pace spikes. Start small: one workflow, one evidence path, one control, one metric. Build from there until privacy, auditability, and vendor controls are routine, not a scramble.

If it makes sense, we can show how teams operationalize the fixes.

https://debales.ai/book-demo?utm_source=blog&utm_medium=content&utm_campaign=ai-governance-framework-for-shipping-privacy-audit-trails-risk&utm_content=privacy-audit-trails