AI Prioritization with the Mess-O-Meter Framework

Tuesday, 18 Nov 2025


The Scientific Precision of AI Prioritization: Triangulating Impact, Complexity, and Human Mess

Organizations everywhere are racing to deploy AI. But without a structured, scientific approach to prioritization, most teams end up with long backlogs, wasted investments, and AI projects that never make it into production. The real challenge isn't choosing what could be automated—it's choosing what should be automated first based on measurable, operationally grounded criteria.

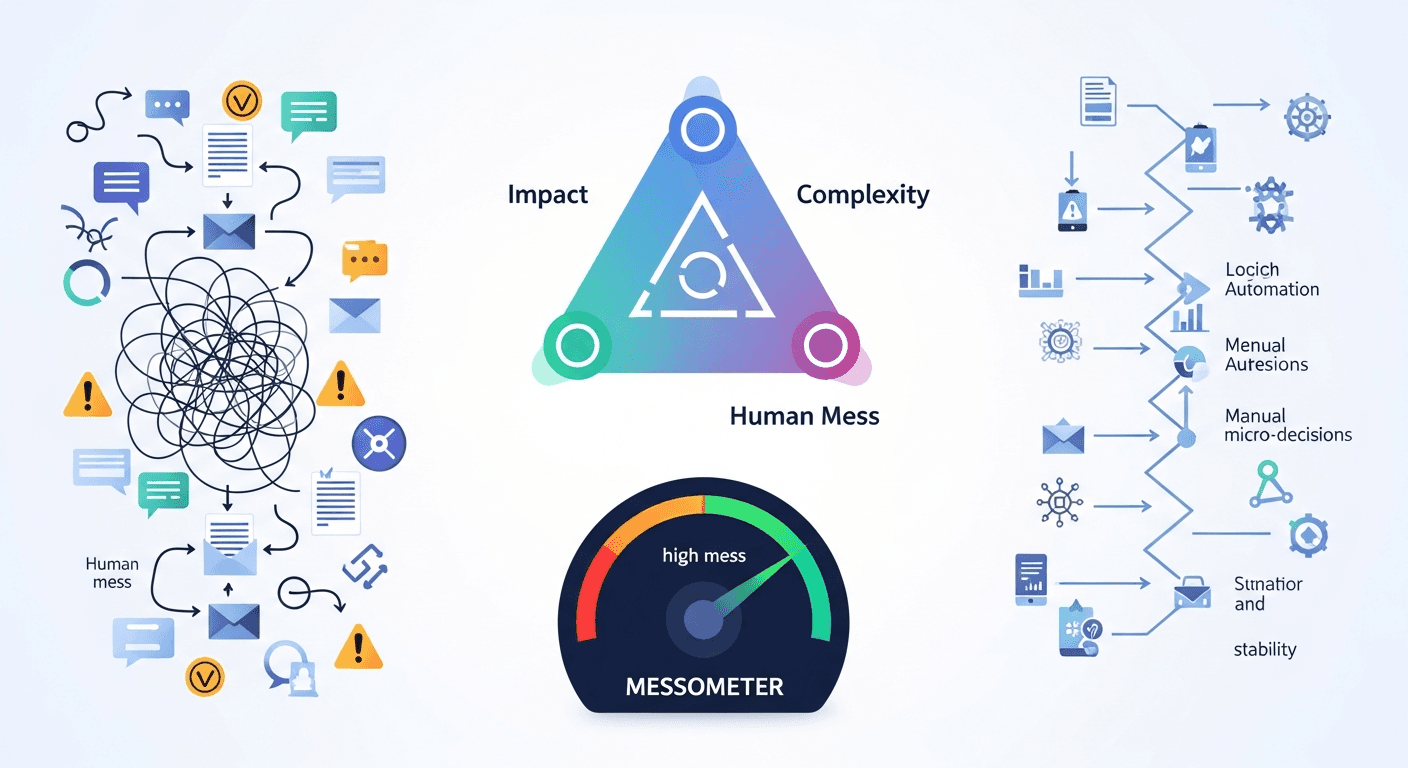

Today, the most reliable method for making those decisions is the Framework for Structured Decisioning and Prioritization, powered by a triangulation model that evaluates Impact, Complexity, and Human Mess. And within that third dimension lies a tool that brings unparalleled clarity: the messometer, a diagnostic instrument that quantifies workflow chaos, conversational friction, and manual micro-decisions.

This article takes a deep dive into how triangulation works—and why human mess may be the most important variable in your entire AI strategy.

Why AI Prioritization Requires Scientific Structure

The Rise of AI Backlogs

AI enthusiasm has created a new organizational problem: everyone has an idea for automation, and every idea sounds worthwhile.

The result?

- 50–300 unranked AI ideas

- No shared evaluation model

- Competing priorities

- Lack of executive clarity

- Massive delays in AI implementation

Why Unstructured Prioritization Fails

When organizations rely on intuition instead of structure, they consistently prioritize:

❌ Projects that sound exciting but are operationally unstable

❌ Use cases with hidden human complexity

❌ Automations that rely on tribal knowledge

❌ AI that gets stuck because processes aren’t mature

A scientific model prevents these mistakes.

The Framework for Structured Decisioning and Prioritization

The Triangulation Model

A reliable prioritization framework must go beyond ROI and feasibility. True predictability comes from evaluating each use case across three axes:

Impact: measurable, meaningful outcomes

Complexity: technical and operational difficulty

Human Mess: hidden workflow friction that destroys AI reliability

Data Sources That Feed Prioritization

To score accurately, organizations combine:

- Operational metrics

- Process documentation

- SME interviews

- messometer assessment data

- Exception logs

- Cycle time patterns

The richer the data, the sharper the prioritization.

Understanding the Three Axes: Impact, Complexity, and Human Mess

Impact Metrics

Impact is evaluated using factors like:

- Cost reduction

- Cycle time compression

- Error elimination

- Revenue uplift

- Employee workload relief

High-impact use cases are always tempting—but impact alone is not enough.

Technical and Operational Complexity

Complexity includes:

- Data readiness

- System integrations

- Exception frequency

- Workflow variability

- Regulatory constraints

High complexity lowers priority unless the benefit is extraordinary.

Hidden Human Mess Revealed by the messometer

This is the most common blind spot.

Human mess is typically invisible without measurement, making the messometer the critical missing tool.

Using the messometer to Quantify Human Mess

Measuring Manual Micro-Decisions

The messometer identifies repeated tiny decisions employees make:

- “Should I route this here or there?”

- “Do I need approval?”

- “Is this exception valid?”

AI cannot succeed without standardizing these.

Tracking Conversational Friction

The messometer exposes:

- Clarification loops

- Missing context

- Unclear requirements

- Reply delays

- Decision reinterpretation

These friction points are silent AI killers.

Identifying Workflow Chaos Patterns

Patterns often reveal:

- Conflicting pathways

- Inconsistent outcomes

- Unpredictable task flow

- High cognitive load

When human mess scores are high, AI prioritization must adjust.

Why Human Mess Is the Most Underrated Prioritization Variable

The Invisible Effort Tax

Employees spend a surprising amount of time just figuring things out.

None of this appears in system logs.

How Human Variation Breaks AI

If 10 people complete the same task in 10 different ways, AI cannot learn reliably.
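One rough way to put a number on this variation is to log the step sequence each person follows and check how often the most common sequence occurs. The sketch below is illustrative only: the function name `path_consistency` and the input format are assumptions, and it is not a description of how the messometer itself measures variation.

```python
from collections import Counter


def path_consistency(paths):
    """Share of executions that follow the single most common step sequence.

    `paths` is a list of step sequences, one per observed execution.
    1.0 means everyone works the same way; values near 0 mean almost
    every execution is unique. (Hypothetical metric for illustration.)
    """
    if not paths:
        raise ValueError("need at least one observed path")
    counts = Counter(tuple(p) for p in paths)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(paths)


# Ten people, ten different ways of completing the same task.
ten_ways = [("open ticket", f"variant {i}", "close ticket") for i in range(10)]
print(path_consistency(ten_ways))
```

A value this low suggests there is no dominant way of working to learn from yet, which is the situation the sentence above describes.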

When High Human Mess Creates High ROI

Sometimes, messy processes are prime candidates for automation because they:

- Consume excessive time

- Cause delays

- Generate errors

- Frustrate teams

This holds only if impact outweighs complexity.

Triangulating for Precision: Combining All Three Axes

This is where the scientific methodology becomes powerful.

Weighted Scoring Model

Each dimension receives a weighted value reflecting organizational strategy.

For example:

- Impact = 50%

- Complexity = 30%

- Human Mess = 20%
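The example weights above can be turned into a single score with a few lines of code. This is a minimal sketch under stated assumptions: each axis is scored on a 0 to 10 scale, and Complexity and Human Mess are inverted so that a higher combined score always means a more attractive use case. The article does not specify the exact formula, so the scale, the inversion, and the names `UseCase` and `priority_score` are all illustrative.

```python
from dataclasses import dataclass

# Example weights from the list above; adjust to organizational strategy.
WEIGHTS = {"impact": 0.5, "complexity": 0.3, "human_mess": 0.2}


@dataclass
class UseCase:
    name: str
    impact: float      # 0-10, higher means more benefit
    complexity: float  # 0-10, higher means harder to build
    human_mess: float  # 0-10, higher means messier workflow


def priority_score(uc, weights=WEIGHTS, scale=10.0):
    """Weighted score on a 0-10 scale (assumed formula).

    Impact counts in favor of a use case; complexity and human mess
    count against it, so both are inverted before weighting.
    """
    return (
        weights["impact"] * uc.impact
        + weights["complexity"] * (scale - uc.complexity)
        + weights["human_mess"] * (scale - uc.human_mess)
    )


invoice_triage = UseCase("Invoice triage", impact=8, complexity=3, human_mess=2)
print(round(priority_score(invoice_triage), 2))  # 0.5*8 + 0.3*7 + 0.2*8 = 7.7
```

Because the weights sum to 1, the result stays on the same 0 to 10 scale as the inputs, which keeps scores comparable across use cases.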

Prioritization Quadrants

Use cases fall into clear categories:

- High Impact / Low Complexity → Automate first

- High Impact / High Mess → Standardize, then automate

- Low Impact / High Complexity → Deprioritize

- Low Mess / Medium Impact → Efficiency wins
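The four categories above could be applied mechanically as a simple rule set. In the sketch below, the 0 to 10 scale, the `high` and `low` thresholds, the order in which rules are checked, and the fallback label are all assumptions made for illustration; the article names the categories but not the cut-offs.

```python
def classify(impact, complexity, mess, high=7.0, low=4.0):
    """Map axis scores (0-10) to one of the categories listed above.

    Assumption: high mess is checked first, so a high-impact,
    low-complexity process that is still messy gets standardized
    before it is automated.
    """
    if impact >= high and mess >= high:
        return "Standardize, then automate"
    if impact >= high and complexity <= low:
        return "Automate first"
    if impact <= low and complexity >= high:
        return "Deprioritize"
    if mess <= low and low < impact < high:
        return "Efficiency win"
    # The four categories do not cover every combination of scores.
    return "Review manually"


print(classify(impact=9, complexity=2, mess=2))  # Automate first
print(classify(impact=9, complexity=2, mess=8))  # Standardize, then automate
print(classify(impact=2, complexity=9, mess=5))  # Deprioritize
```

The explicit fallback matters: many real use cases land between the named categories and still need a human judgment call.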

Scientific Ranking Method

The triangulation engine ranks all use cases from most to least viable with numerical scores.
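As a rough illustration of what such a ranking step involves, the sketch below sorts use cases by a precomputed numerical score, highest first. The function name `rank_use_cases`, the alphabetical tie-break, and the sample scores are assumptions; the article does not describe the engine's internals.

```python
def rank_use_cases(scores):
    """Return (position, name, score) tuples, most viable first.

    `scores` maps a use case name to its numerical score. Ties are
    broken alphabetically so the ranking is deterministic.
    """
    ordered = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [(pos, name, score) for pos, (name, score) in enumerate(ordered, start=1)]


# Hypothetical scores for illustration only.
portfolio = {"Contract review": 5.0, "Invoice triage": 7.7, "Onboarding": 5.0}
for position, name, score in rank_use_cases(portfolio):
    print(position, name, score)
```

A deterministic tie-break keeps the list stable between refreshes, so a use case only moves when its score actually changes.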

Case Example: How Triangulation Reduces AI Failure Risk

Before Triangulation

A company prioritized a high-impact onboarding automation.

It failed repeatedly.

After messometer Measurement

The messometer revealed:

- 39 micro-decisions

- Highly inconsistent task flow

- Frequent verbal clarifications

- Unpredictable exception paths

Resulting Deployment Success

Once the process was standardized, the AI's accuracy rose from 28% to 91%.

Building an AI Prioritization Map Your Executives Can Trust

Visualizing the Portfolio

Executives see a clear chart showing each use case plotted on:

- Impact

- Complexity

- Human Mess

Identifying Low-Complexity / High-Impact Wins

These accelerate AI momentum.

Avoiding High-Complexity / High-Mess Traps

These projects derail teams for months.

The Role of Operational Readiness in AI Prioritization

Why AI Needs Process Stability

Chaos in → chaos out.

How the messometer Establishes Readiness Scores

A high messometer score signals the need for process work before deployment.

Turning Prioritization into an Iterative Scientific Process

Feedback Loops

Scores update as conditions change.

Continuous Measurement

The messometer provides real-time improvement visibility.

Updating Scores with New Data

Each iteration sharpens prioritization accuracy.

Executive Decisioning: How to Present Findings for Approval

Clear Prioritization Narratives

Executives see the logic behind the ranking.

Evidence-Based Recommendations

Triangulated data eliminates emotional decisioning.


FAQs About the Framework and the messometer

1. What makes this prioritization model scientific?

It uses measurable data from three independent axes.

2. Why is the messometer necessary?

It reveals hidden complexity in human-driven workflows.

3. How often should messometer scoring be refreshed?

Every 3–6 months or after major workflow changes.

4. Can high-mess processes still be automated?

Yes, with standardization work first.

5. How does triangulation reduce AI failure?

It prevents teams from choosing AI-unfriendly use cases.

6. Where can I learn more about decisioning frameworks?

Visit: https://hbr.org

Conclusion

AI prioritization is no longer a guessing game.

By triangulating Impact, Complexity, and Human Mess, and by using the messometer to measure the third, organizations can rank AI opportunities with precision, confidence, and clarity.

This framework transforms AI planning from intuition to science.